Fellowship Assessment

Contact details

Australian College of Rural and Remote Medicine

Level 1, 324 Queen Street

GPO Box 2507

Brisbane QLD 4000

P: (+61) 7 3105 8200 or 1800 223 226

F: (+61) 7 3105 8299

E: assessment@acrrm.org.au

Website: www.acrrm.org.au

ABN: 12 078 081 848

Copyright

© 2021 Australian College of Rural and Remote Medicine. All rights reserved. No part of this document may be reproduced by any means or in any form without express permission in writing from the Australian College of Rural and Remote Medicine.

Version 2.5.2023

Date published: April 2023

Date implemented: April 2023

Date for review: December 2023

ACRRM acknowledges Australian Aboriginal People and Torres Strait Islander People as the first inhabitants of the nation. We respect the traditional owners of lands across Australia on which our members and staff work and live, and pay respect to their elders past, present and future.

The Australian College of Rural and Remote Medicine

Introduction

Philosophy of ACRRM Assessment

The Australian College of Rural and Remote Medicine (College) views assessment as an ongoing and integral part of learning. The assessment process has a purposeful developmental design that helps learners identify and understand their strengths and weaknesses, and provides feedback to guide their future development. The assessment program is designed to contribute to the development of lifelong learning practices and skills.

The College has developed, and delivers, the assessment program based on three key principles:

- Candidates can participate in assessment within the locality where they live and work, so that rural and remote Australia is not depleted of its medical workforce (candidates and assessors) during assessments;

- The content of assessments is developed by clinically active rural and remote medical practitioners; and

- Assessment plays a role in enabling candidates to become competent, confident and safe medical practitioners practising independently in their provision of health care to rural and remote individuals and communities.

Programmatic approach

The College assessment process is designed using a programmatic approach. The programmatic approach allows the College to combine assessment methods with different psychometric properties, including workplace-based and standardised assessments. For example, there is a balance between the clinical assessment in Structured Assessment using Multiple Patient Scenarios (StAMPS), which provides a highly structured and standardised approach, and the Case Based Discussion (CBD), which assesses the candidate's clinical practice in the unique setting of their own clinical environment. Similarly, the Multi-Source Feedback (MSF) and the formative Mini-Clinical Evaluation Exercise (miniCEX) measure different aspects of the candidate's professional behaviour, one as perceived by patients and colleagues and the other through direct assessor observation.

Each assessment item has proven validity and reliability while assessing different aspects of the candidate’s skills, knowledge, and attitudes from different perspectives. The combination of approaches provides a more nuanced and detailed picture of a registrar’s development.

Each candidate is required to achieve at least a pass grade in each of the summative assessment modalities. Passing all of these modalities demonstrates that the candidate has the knowledge, skills and attitudes required for rural generalist practice as outlined in the ACRRM Rural Generalist Curriculum.

The combination of modalities ensures that each competency is assessed at least once during the training program. For example, professionalism is predominantly measured by the MSF assessment, while applied knowledge is predominantly measured by the Multi-Choice Question (MCQ) assessment.

Principles of Assessment

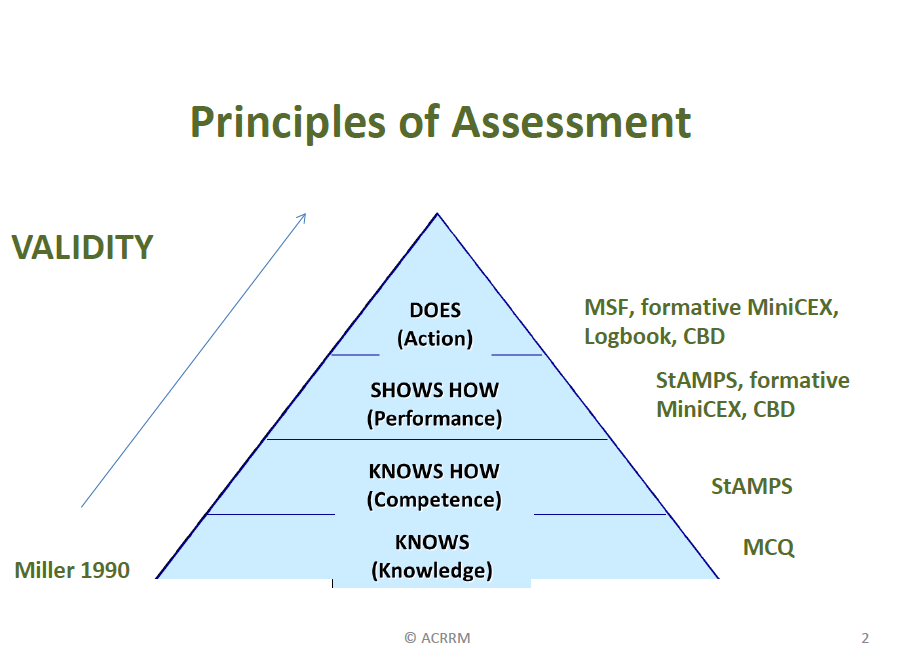

Miller (1990) introduced an important framework that can be presented as four tiers/levels of a pyramid to categorise the different levels at which trainees can be assessed throughout their training. Collectively, the College assessments embrace all four levels of Miller's Pyramid (Figure 1).

Miller emphasised that all four levels (knows, knows how, shows how and does) must be assessed to obtain a comprehensive understanding of a trainee's ability.

- Does: Performance integrated into practice

- Shows how: Simulated demonstration of skills in an examination situation

- Knows how: Application of knowledge to medically relevant situations

- Knows: Knowledge or information that the candidate has learned

Examples of the assessment tools at each level of College assessments are:

- Does (Action): MSF, formative miniCEX, Logbook and CBD

- Shows how (Performance): StAMPS, formative miniCEX, CBD

- Knows how (Competence): StAMPS

- Knows (Knowledge): MCQs

Figure 1: Miller's Pyramid (Miller G 1990, The Assessment of Clinical Skills/Competence/Performance)

Assessment blueprint

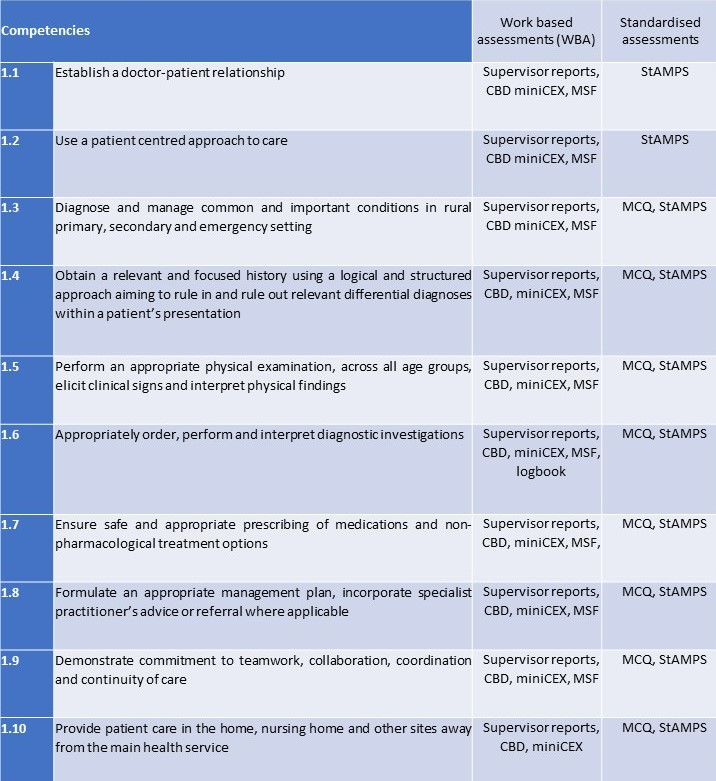

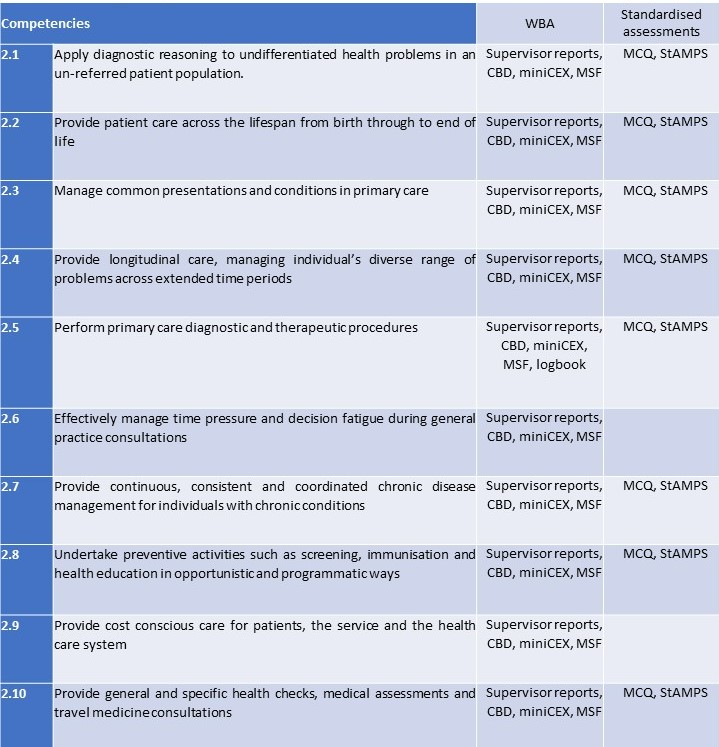

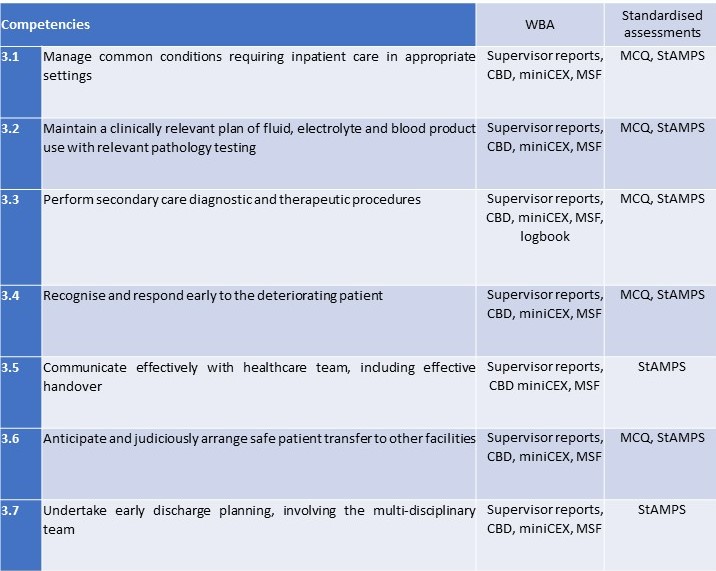

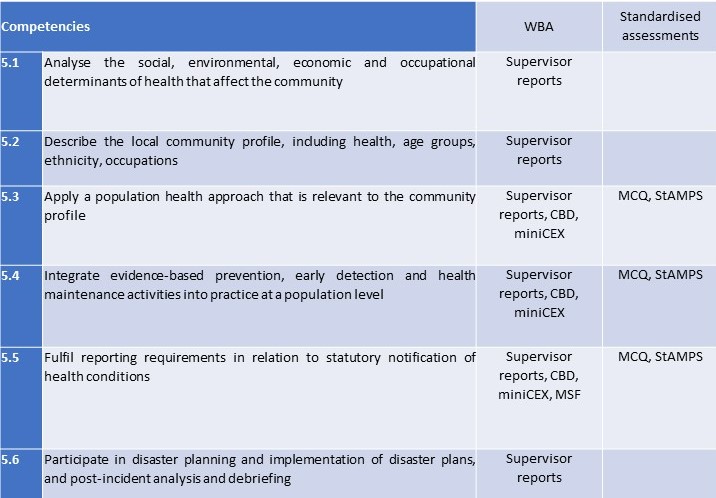

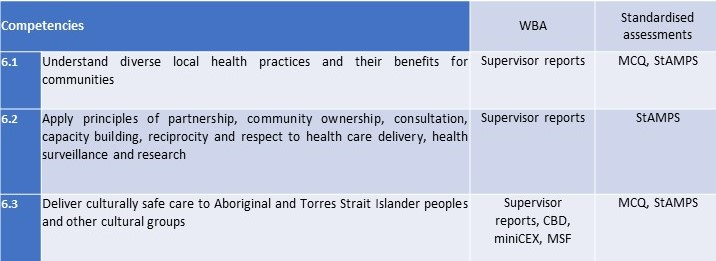

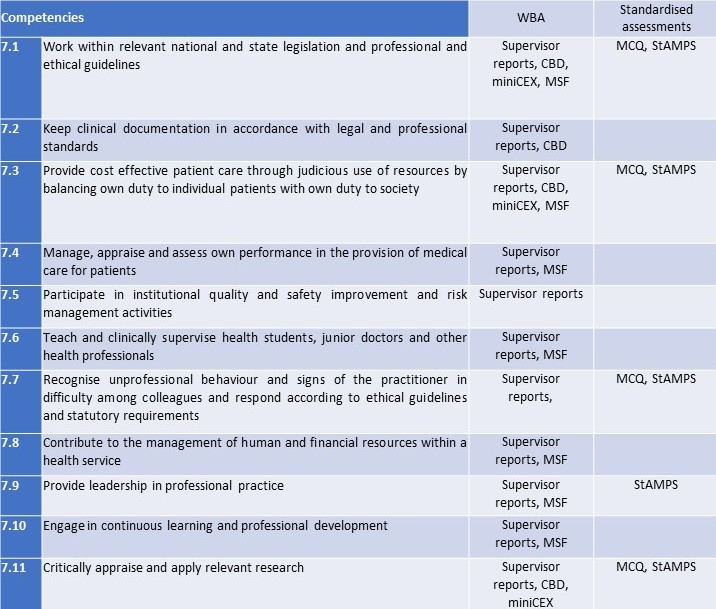

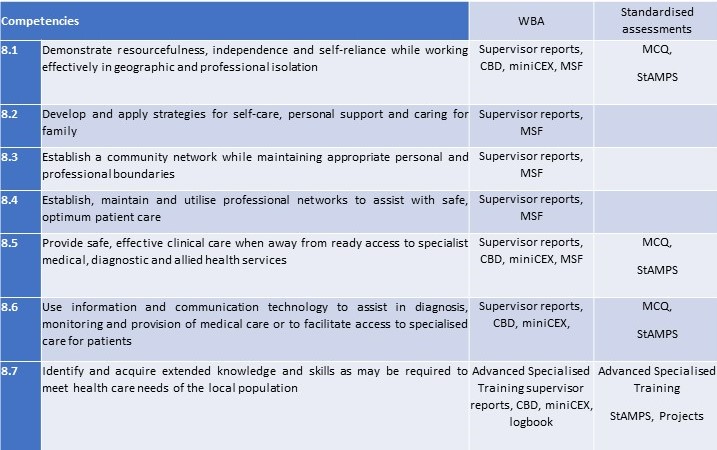

The assessment program has been developed around the Rural Generalist Curriculum competencies under the eight domains of rural practice.

Domain 1 - Provide expert medical care in all rural contexts

Domain 2 - Provide primary care

Domain 3 - Provide secondary medical care

Domain 4 - Respond to medical emergencies

Domain 5 - Apply a population health approach

Domain 6 - Work with Aboriginal, Torres Strait Islander and other culturally diverse communities to improve health and wellbeing

Domain 7 - Practise medicine within an ethical, intellectual and professional framework

Domain 8 - Provide safe medical care while working in geographic and professional isolation

Examiners, Assessors and Item Writers

The College has a team of writers, editors and assessors, and aims for the broadest possible geographic and demographic representation of its membership within the team. Core Generalist Training assessment team members are required to be experienced rural practitioners who hold Fellowship of ACRRM (FACRRM). Advanced Specialised Training assessment team members comprise a combination of doctors holding FACRRM and Fellows of other relevant specialist medical colleges.

All College Assessors undergo ongoing training throughout their time as Assessors.

The “expert team” developing assessment items for MCQ and StAMPS has input from a larger group of practising rural doctors.

The College uses several processes to evaluate the effectiveness of the Fellows who contribute to assessment modalities. Post-assessment feedback from candidates, assessors, invigilators and others involved in assessment is evaluated routinely after each assessment. This information is reviewed by the lead assessors and fed back to the assessors and/or writers as appropriate.

Code of Conduct

The College has an Examiner Charter and Code of Conduct that outlines examiners' roles and responsibilities. The Code of Conduct is available on the College website.

Conflict of interest

Examiners are required to declare any conflict of interest with a candidate they are assigned to assess before the assessment takes place. A declared conflict of interest will be taken into consideration and addressed accordingly. In the CBD, registrars are told in advance who will be assessing them and can notify the College of any conflict of interest.

Quality assurance processes

The College's assessment program uses a range of quality assurance processes.

The College has a documented process based on best practice for standard setting and definition of the cut-off point between a pass and fail in each of the summative assessment modalities. These are described in the chapters relating to each modality.

Following an assessment, standard question reliability statistics such as Cronbach’s alpha are considered, with reliable questions/items placed in the repository for future assessments or to be included in publicly released practice assessments. Those with poor reliability are redeveloped or retired.
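
Cronbach's alpha summarises how consistently a set of items measures the same underlying ability. For k items it is alpha = k / (k - 1) × (1 - (sum of the item variances) / (variance of candidates' total scores)), giving values near 1 when items rank candidates consistently. This handbook does not describe how the College computes it, so the following is only a minimal sketch of the textbook formula, assuming a simple candidates-by-items score matrix; the function name `cronbach_alpha` and the scores are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Textbook Cronbach's alpha for a candidates x items score matrix.

    scores[c][i] is candidate c's score on item i (hypothetical layout).
    """
    if not scores:
        raise ValueError("need at least one candidate")
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != n_items for row in scores):
        raise ValueError("every candidate needs a score for every item")

    # Variance of each item across candidates. Population variance is used for
    # both items and totals, so the denominator choice cancels in the ratio.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(n_items)]

    # Variance of each candidate's total score across all items.
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")

    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Made-up scores: four candidates, three items marked 0 or 1.
toy = [[1, 0, 1], [1, 1, 1], [0, 0, 1], [0, 0, 0]]
print(round(cronbach_alpha(toy), 2))  # 0.75
```

In practice this test-level figure would sit alongside item-level statistics when deciding which questions are retained, redeveloped or retired.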

When StAMPS is delivered across multiple sites, the Assessors assessing the same scenario attend a moderator session together with the lead Assessor to facilitate consistent delivery and marking of the scenario. At each StAMPS assessment centre, a lead Assessor ensures that the assessment is delivered in a fair and consistent manner and that processes are adhered to. The lead Assessor observes Assessors across the assessment session and replaces an Assessor when a conflict of interest has been declared. The lead Assessor is also responsible for providing feedback to Assessors. At the conclusion of the StAMPS, all Assessors attend a debriefing session, which includes an opportunity to calibrate their marking.

As a standard part of College assessment processes, assessments may be recorded. Candidates are notified when this is occurring, and continued participation in the assessment is considered consent to the recording. These recordings are used for the purpose of quality assurance and are the property of the College. Recordings are not made available to candidates or medical educators. Retention of recordings is managed in line with the College's document management policy.

The College formally evaluates the validity and reliability of each assessment modality before finalising results. Formal statistical testing is completed after each MCQ and StAMPS assessment to identify any discrepancies that may suggest the assessment was unfair for all or some candidates. This analysis includes a performance breakdown of each StAMPS scenario for each day, as well as analysis of Examiner grading and of the candidate cohort. The CBD assessment is formally analysed through regular session reviews throughout the year and statistical analysis at the end of each year.

The College conducts ongoing evaluation of the assessment process to ensure fairness and equity for all participants. After each assessment, candidates, invigilators, examiners and staff are invited to provide feedback via an anonymous online survey. The College has introduced a continuous quality assurance process for all assessments which is reported to the Assessment Committee.

The results of these processes feed directly back to the Assessment team, inform policy and procedure, and contribute to the ongoing development and refinement of all processes. This process also provides a formal route to inform the Training program about the educational impact of the assessment modalities.

The Assessment Committee provides oversight of all aspects of the assessment process. This duly constituted Committee reports to the Education Council.

Candidate assessment rules

The following rules apply to all College assessments:

- Candidates must arrive at the approved venue at the time specified in the instructions to candidates that are provided before the assessment date.

- Candidates must provide valid photographic identification (e.g. driver's licence or passport) to the invigilator for verification of identity.

- Food and drink are not permitted in the assessment room, other than water in a clear plastic bottle or food required for medical reasons.

- Candidates must not take any item into the assessment room that has not been authorised by the College.

- Mobile phones and all other electronic devices, including but not limited to smart watches and wireless communication devices, must be turned off and left with the invigilator.

- Candidates are strictly prohibited from accessing any component or function of the computer, email or internet sites, other than the platform used for the conduct of the examination.

- Candidates must be accompanied by their invigilator or an authorised person if a restroom break is required.

- At the conclusion of the exam, all handwritten notes and exam material must remain in the exam room with the invigilator, who will immediately return them to the College.

- Candidates must not share any information related to the content of the assessment; doing so is a serious breach of the College's Academic Code of Conduct. Any breach or alleged breach will be dealt with in accordance with that policy.

Venues and Invigilators

All candidates should refer to the relevant Assessment Venue Requirements when choosing a venue and invigilator. A candidate should seek a venue and invigilator as soon as they have enrolled for an assessment.

Venue

A candidate is required to undertake their assessment at a venue that has been approved by the Assessment team, as outlined in the relevant Assessment Venue Requirements document available on the College website. It is the candidate's responsibility to ensure that the venue requirements are met.

The use of a private residence is only permitted for Mock StAMPS and CBD, or in the event a chosen/approved venue becomes inaccessible/unavailable due to a natural disaster. In this situation, a candidate who has been affected must obtain approval from the Assessment team.

The candidate must ensure that the room being used for the assessment does not contain any medical reference materials or other items excluded under the candidate assessment rules.

Invigilators

Invigilators play a central role in ensuring the security of all StAMPS and MCQ assessments. Candidates are responsible for finding a suitable invigilator and will be unable to undertake the assessment without an appropriate invigilator.

All invigilators are subject to approval by the Assessment team, which has the discretionary authority to approve or decline the nominated invigilator. If a nominated invigilator is deemed unsuitable for any reason, the candidate will be notified and required to nominate a replacement invigilator. Invigilators are paid by the College.

Incidents or irregularities

A candidate or assessor who has a concern about the management or conduct of the assessment should complete an Incident Report. An Incident Report must be submitted to the Assessment team within 48 hours of the conclusion of the assessment.

Examples of incidents or other irregularities include, but are not limited to:

- An uncooperative candidate or Examiner

- Assessment procedures not followed

- Disturbances (e.g. an unexpectedly noisy consulting room, fire alarms/drills)

- Unauthorised persons entering the assessment room

- Unauthorised material in the assessment room

- Technical disruptions (e.g. loss of power or internet)

- Unforeseen emergency evacuations.

In the event of an unforeseen emergency evacuation, the Invigilator must:

- Contact the Assessment team immediately or as soon as practicable;

- Collect all the assessment material from the candidate and evacuate with the candidate as per the venue's emergency evacuation procedures;

- Remain with the candidate at all times for the duration of the evacuation;

- If possible, maintain contact with the Assessment team throughout the evacuation; and

- Follow the venue's emergency evacuation orders and return to the assessment room/venue only when safe to do so and as instructed by the venue's emergency contacts or authorities.

Any time lost as a result of an emergency evacuation will be added back to the assessment, allowing the candidate to continue and complete the assessment on the same day if and when it is safe to do so. Depending on the duration of the evacuation, the candidate's assessment may be rescheduled to another date.

Results

Following the completion of all post assessment quality assurance processes, a recommendation is presented to the ACRRM Board of Examiners (BoE). The BoE meets on a regular basis and is responsible for the ratification of results. Candidates are provided with an outcome letter and feedback report (if applicable) shortly after the ratification of results by the BoE.

Once available, results are uploaded to the “My Documents” section of a candidate’s “My College” portal, accessible via the College website. Candidates will receive an email notification once results are uploaded.

The dates for release of results are published for each assessment on the Assessment dates, enrolments and fees webpage.

Public assessment reports

The public report provides assessment statistics, a description of the scenarios/questions, feedback from the Principal Assessor and a summary of stakeholder feedback and planned improvements. The Assessment Public Reports are published on the Assessment resources webpage.

A public report is published on the College website following each Core Generalist Training (CGT), Advanced Specialised Training (AST) Emergency Medicine (EM) StAMPS and MCQ assessment. CBD public reports are published annually.

Undertaking Assessments Overseas

The College has provisions in place for candidates who will be overseas at the time of their assessment. Candidates who wish to undertake an assessment overseas must contact the Assessment team as soon as practicable for further advice before finalising their enrolment.

The MCQ and StAMPS assessments can be completed overseas, subject to appropriate invigilation and technical requirements being met. See Assessment Venue Requirements for further information. Mock StAMPS can also be completed overseas from a suitable venue, provided the technical requirements are met.

The MSF, CBD and formative miniCEX can only be undertaken overseas if in an approved overseas training placement. Refer to the Overseas Training Placements policy.